Why an 88% hallucination rate is acceptable to Google — and to Apple

An 88% hallucination rate would be unacceptable in almost any other domain:

- A medical device that was wrong 88% of the time would be banned.

- A navigation system that invented roads 88% of the time would be recalled.

- A financial model that hallucinated facts 88% of the time would be unusable.

Yet that number now appears in public analyses of Google Gemini.

So the question isn’t whether the number is bad. It obviously is.

The real question is: why is this acceptable anyway — not just to Google, but to Apple as well?

1. The number itself

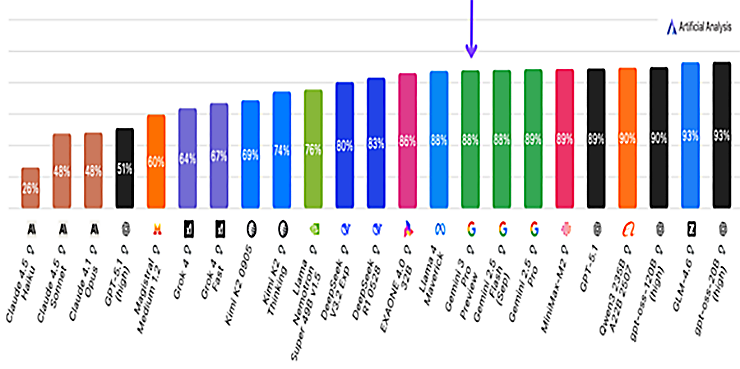

Independent testing and third-party evaluations show Gemini hallucinating at roughly 88% on certain structured factual tasks.

- That does not mean Gemini is “wrong all the time.”

- It means that when asked to provide verifiable, grounded answers, it frequently produces confident but untrue output.

That distinction matters — because confident error is more dangerous than visible uncertainty.

This is not a tuning issue.

It is not a prompt issue.

It is not a “next release will fix it” issue.

It is a systems problem.

2. Why Google accepts this

Google accepts this failure rate for a simple reason:

There is no internal mechanism that forces the system to slow down, qualify itself, or withhold confidence when evidence is missing.

In other words, Gemini is allowed to sound sure even when it is guessing.

That isn’t negligence. It’s architecture.

Modern LLMs are optimized for:

- fluency

- responsiveness

- plausibility

They are not optimized for:

- epistemic restraint

- confidence hygiene

- governed uncertainty

Absent a governance layer, hallucination is not a bug — it is an emergent property.

3. Why Apple accepts this too

Here’s the part that should worry people.

Apple plans to integrate Gemini into Siri in spring 2026.

Apple knows this data. Apple understands the risk.

So why proceed?

Because Apple is making a calculated tradeoff:

- limit scope

- constrain surface area

- rely on UX guardrails

- avoid deep memory or longitudinal reasoning

In plain terms: Apple is choosing containment, not correction.

That strategy reduces visible harm, but it does not fix the underlying failure mode:

confident output without evidence.

The hallucination risk doesn’t disappear. It’s just hidden behind a cleaner UI.

4. What’s actually missing: a governance layer

What neither Google nor Apple currently deploys is a governance layer that regulates confidence itself.

Such a layer would enforce a simple rule:

If output is not grounded in empirical data, the system must either

(a) qualify its uncertainty,

(b) ask for more information, or

(c) recommend actions that generate new data.
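
To make the rule concrete, here is a minimal sketch of how such a gate could look in Python. Everything in it is an illustrative assumption rather than a description of any vendor's system or of any filed mechanism: the `Draft` fields, the `govern` function, the wording of the messages, and the order in which options (a), (b), and (c) are tried are all hypothetical. "Grounded" is reduced here to "at least one piece of evidence is attached", which is far cruder than a real check would need to be.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ANSWER = "answer"        # grounded: pass the draft through unchanged
    QUALIFY = "qualify"      # (a) state the uncertainty explicitly
    ASK = "ask"              # (b) ask for more information
    RECOMMEND = "recommend"  # (c) suggest a step that generates new data


@dataclass
class Draft:
    """A model's draft output plus hypothetical metadata used by the gate."""
    text: str
    evidence: list[str] = field(default_factory=list)        # sources backing the text
    missing_inputs: list[str] = field(default_factory=list)  # facts the user could supply
    data_actions: list[str] = field(default_factory=list)    # steps that would yield new data


def govern(draft: Draft) -> tuple[Action, str]:
    """Apply the rule: ungrounded output may not be returned as a confident answer."""
    # Assumption: any attached evidence counts as grounding.
    if draft.evidence:
        return Action.ANSWER, draft.text
    # (b) Prefer asking when the gap is something the user can fill.
    if draft.missing_inputs:
        needed = ", ".join(draft.missing_inputs)
        return Action.ASK, f"To answer reliably I need: {needed}."
    # (c) Otherwise point to an action that would produce the missing data.
    if draft.data_actions:
        step = draft.data_actions[0]
        return Action.RECOMMEND, f"I cannot verify this yet. Suggested next step: {step}."
    # (a) Fall back to an explicit qualification of the draft.
    return Action.QUALIFY, f"Unverified, treat as a guess: {draft.text}"
```

In this sketch, a draft with no evidence and no other metadata, such as `Draft("The bridge opened in 1931.")`, comes back tagged `Action.QUALIFY` with the text prefixed as unverified. The point of the design is that the confident path is the only one that requires evidence; every other path changes how the output sounds, not just what it says.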

This is not content moderation.

This is not safety theater.

This is decision hygiene.

Without it, models will continue to hallucinate — because nothing tells them when not to sound sure.

An 88% hallucination rate is therefore “acceptable” only because nothing in the system disallows it.

5. Notice and freedom to operate

I have already filed for intellectual property protection covering this class of governance mechanisms with the United States Patent and Trademark Office.

That matters.

Because once a governed confidence layer is described, disclosed, and timestamped, every major AI vendor must ask a basic question:

Do we have freedom to operate if we implement something similar?

Google, Apple and others are free to ignore the problem — until they decide they can’t.

At that point, governance stops being optional.

The uncomfortable conclusion

An 88% hallucination rate isn’t acceptable because it’s safe.

It’s acceptable because the industry has not yet forced itself to say:

“You are not allowed to sound confident unless you’ve earned it.”