Unlike today’s AI, AI 2.0 will make dollars and sense

First, AI must make dollars and sense in two ways:

Before AI can be trusted, governed or widely adopted, it has to work as a business. Today it doesn’t. The economics are broken on both sides of the ledger: revenue is mispriced and costs are uncontrolled.

1. Revenue generation requires metered compute

AI cannot be sold sustainably through flat-rate subscriptions, or by treating tokens as a proxy for compute. Both models guess at value instead of measuring it.

Metered compute ties revenue directly to what actually runs, allowing pricing to reflect real usage, real value and real demand. Without accurate metering, AI cannot scale profitably—no matter how impressive the model appears.
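To make the idea concrete, here is a minimal sketch of metering, assuming compute can be measured per task in GPU-milliseconds. The `UsageMeter` class, the unit of measure and the price are all hypothetical, chosen for illustration only; integer micro-dollars are used so the arithmetic stays exact.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class UsageMeter:
    """Hypothetical meter: bills from measured compute, not from a flat fee."""

    price_micro_usd_per_gpu_ms: int  # assumed pricing unit, for illustration
    records: List[Tuple[str, int]] = field(default_factory=list)

    def record(self, task_id: str, gpu_ms: int) -> None:
        """Store the compute a task actually consumed."""
        if gpu_ms < 0:
            raise ValueError("measured compute cannot be negative")
        self.records.append((task_id, gpu_ms))

    def invoice_micro_usd(self) -> int:
        """Revenue is the sum of what ran, multiplied by the unit price."""
        return sum(ms for _, ms in self.records) * self.price_micro_usd_per_gpu_ms


meter = UsageMeter(price_micro_usd_per_gpu_ms=2)
meter.record("summarize-report", 12_500)
meter.record("classify-ticket", 500)
print(meter.invoice_micro_usd())  # 26000 micro-dollars, i.e. $0.026
```

The point of the sketch is only that the invoice is derived from recorded usage. A flat subscription would produce the same bill whether these two tasks ran once or a thousand times.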

2. Cost control requires pre-execute provisioning

Most AI systems execute first and discover cost later. That guarantees waste. Pre-execute provisioning allocates compute before a task runs, based on what the task actually requires.

By preventing over-allocation at the source, this approach can eliminate the majority of unnecessary inference spend—cutting compute costs by as much as 90%. Revenue metering and cost control must happen together, in the same place, because they are inseparable economic functions.
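As a rough illustration of sizing compute before execution, the sketch below estimates what a task needs from its inputs and refuses to start it if the estimate exceeds the budget. The `Task` fields, the one-unit-per-1,000-tokens rule and the function names are assumptions made for this example, not a description of any real provisioning system, and the sketch makes no claim about the size of the savings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    input_tokens: int
    max_output_tokens: int


def estimate_units(task: Task) -> int:
    """Hypothetical sizing rule: one compute unit per 1,000 tokens, minimum one."""
    total_tokens = task.input_tokens + task.max_output_tokens
    return max(1, -(-total_tokens // 1000))  # ceiling division


def provision(task: Task, budget_units: int) -> int:
    """Decide the allocation before the task runs, not after the bill arrives."""
    needed = estimate_units(task)
    if needed > budget_units:
        raise RuntimeError(f"{task.name} needs {needed} units, budget is {budget_units}")
    return needed


task = Task("classify-ticket", input_tokens=1500, max_output_tokens=500)
print(provision(task, budget_units=10))  # 2
```

Because the allocation is computed up front, the same number can feed the meter, which is one way to read the claim that metering and cost control belong in the same place.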

Second, AI must become useful to users in two ways:

Once AI works economically, it still has to work for people. When adoption fails, it isn’t because models aren’t powerful enough. It fails because users don’t trust LLMs.

1. Lossless memory is required for trust and adoption

LLMs today do not forget safely, do not remember reliably, and do not improve based on governed, verified user input. Instead, they rely on workarounds such as Retrieval-Augmented Generation (RAG), which pulls only selected fragments into a finite context window. Whatever does not fit is compressed, discarded or papered over with hallucination. Those memory limits are real and unavoidable. They cannot be iterated away.

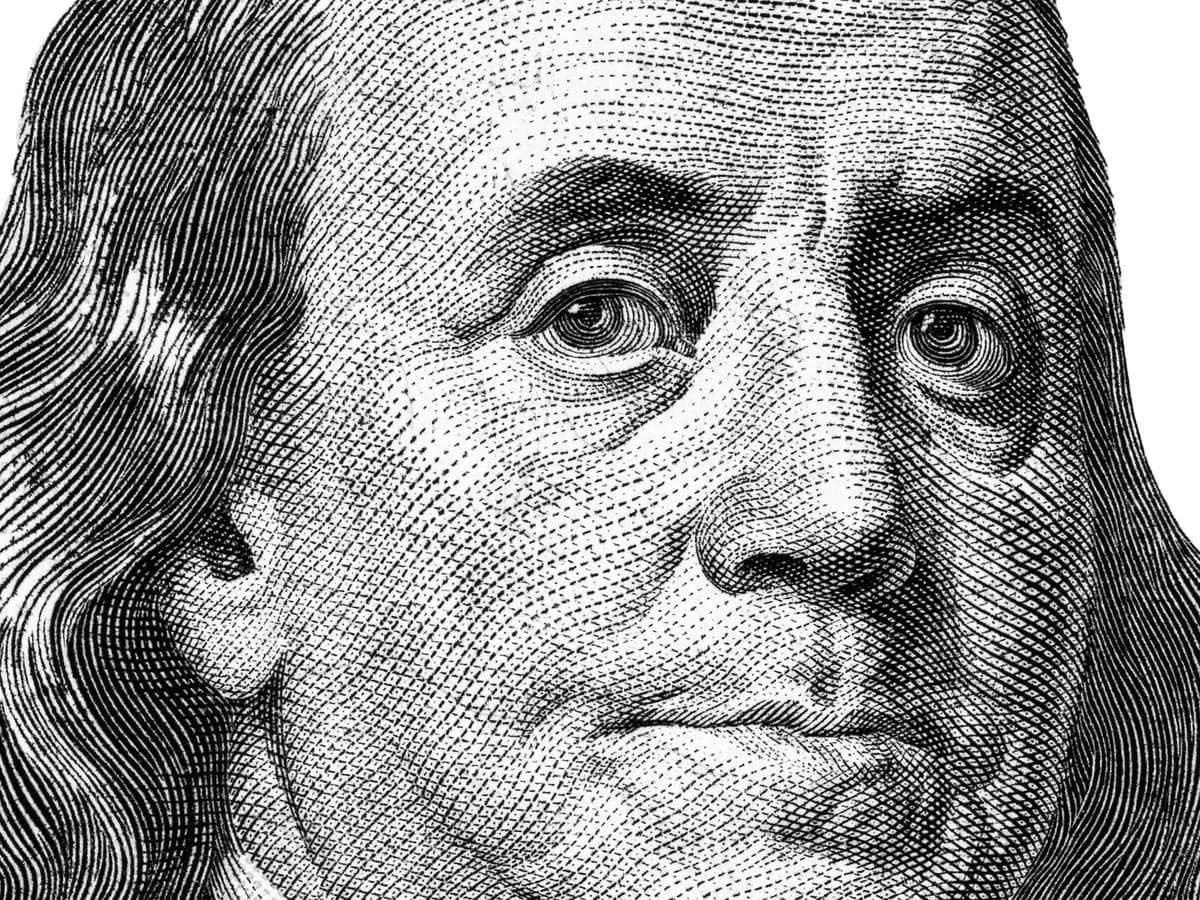

This workaround looks familiar. LLMs survive finite memory the same way JPEGs survived slow networks: through lossy compression. JPEGs throw away pixels. LLMs throw away facts. And at first glance, the loss is easy to miss.

But look closely at a JPEG and the damage becomes obvious: blurred edges, missing detail, compression artifacts that weren’t visible at first.

Look closely at an LLM’s output and the same pattern emerges. The seams show. The missing facts surface. The confident answer masks an incomplete or distorted underlying record. Once you notice those gaps, you understand what the system has really lost.

What JPEGs lose are pixels.

What LLMs lose is truth.

Without reliable memory, AI cannot be trusted. Without trust, AI cannot scale. And without scale, the market caps tied to AI infrastructure evaporate.

Users will not trust an agent that doesn’t remember. When memory is lossy or ephemeral, every interaction effectively resets. Context disappears. Confidence never accumulates. Users are forced to repeat themselves, recheck outputs, and treat the system as disposable rather than dependable.

Memory must become fully lossless. Lossless memory is not a feature. It is the prerequisite for iterative learning and for any durable relationship between a user and an AI system.
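One way to picture the requirement is an append-only store that keeps every entry verbatim and checks on recall that nothing was altered. This is a toy sketch under that assumption; `AppendOnlyMemory` and its methods are hypothetical names, and a real system would also need persistence, access control and scale.

```python
import hashlib
from typing import List


class AppendOnlyMemory:
    """Hypothetical store: entries are kept verbatim, never summarized or evicted."""

    def __init__(self) -> None:
        self._entries: List[str] = []
        self._digests: List[str] = []

    @staticmethod
    def _digest(text: str) -> str:
        return hashlib.sha256(text.encode("utf-8")).hexdigest()

    def remember(self, text: str) -> int:
        """Append the exact text and return its position in the record."""
        self._entries.append(text)
        self._digests.append(self._digest(text))
        return len(self._entries) - 1

    def recall(self, index: int) -> str:
        """Return the original text, verifying it still matches its digest."""
        text = self._entries[index]
        if self._digest(text) != self._digests[index]:
            raise ValueError("stored entry no longer matches what was remembered")
        return text


memory = AppendOnlyMemory()
slot = memory.remember("User prefers metric units.")
print(memory.recall(slot))  # User prefers metric units.
```

The contrast with lossy approaches is that recall returns exactly what was stored, or fails loudly, rather than returning a plausible approximation.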

2. User-controlled governance enables personalization

User-controlled governance is not an alternative to platform safety—it works alongside it. LLM providers will and should continue to enforce top-level governance around non-negotiable issues such as self-harm, illegality and systemic abuse. That outer layer defines what AI must never do.

But inside that boundary, users need the ability to customize how the system behaves for their work.

If you hate Oxford commas, you should be able to tell the system once and never see them again.

If you want concise answers, cautious language or a specific analytical style, those preferences should persist.

User-controlled governance gives people tuning pegs inside the safe envelope: a way to customize behavior, set boundaries and trust the system to honor those choices consistently. That’s how safety and personalization coexist—and how AI becomes genuinely usable.
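The two layers described above can be sketched as a preference store that persists user choices across sessions while refusing to let users override platform rules. The key names, the JSON file and the `UserPreferences` class are all hypothetical, used here only to show the shape of the idea.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical names for provider-enforced rules that users may not override.
PLATFORM_LOCKED = frozenset({"block_self_harm_content", "block_illegal_instructions"})


class UserPreferences:
    """User-tunable settings that persist, inside a boundary the platform owns."""

    def __init__(self, path: Path) -> None:
        self._path = path
        self._prefs = json.loads(path.read_text()) if path.exists() else {}

    def set(self, key: str, value) -> None:
        if key in PLATFORM_LOCKED:
            raise PermissionError(f"{key!r} is set by the platform, not the user")
        self._prefs[key] = value
        self._path.write_text(json.dumps(self._prefs))  # persist immediately

    def get(self, key: str, default=None):
        return self._prefs.get(key, default)


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "prefs.json"
    UserPreferences(store).set("oxford_comma", False)  # tell the system once
    later_session = UserPreferences(store)             # a new session reloads it
    print(later_session.get("oxford_comma"))           # False
```

The outer layer is a fixed set the user cannot write to; everything else is a tuning peg that stays where the user left it.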

Joni Mitchell never accepted a guitar as it was handed to her.

She tuned it.

Then she tuned it again.

And again.

Over her career, she used dozens of custom guitar tunings, some so unconventional they barely resembled standard tuning at all. One was nicknamed California Kitchen Tuning.

She didn’t fight the instrument.

She reshaped it.

That’s how great tools work. They don’t impose themselves on the user. They invite collaboration.

That’s exactly what’s missing from AI today.