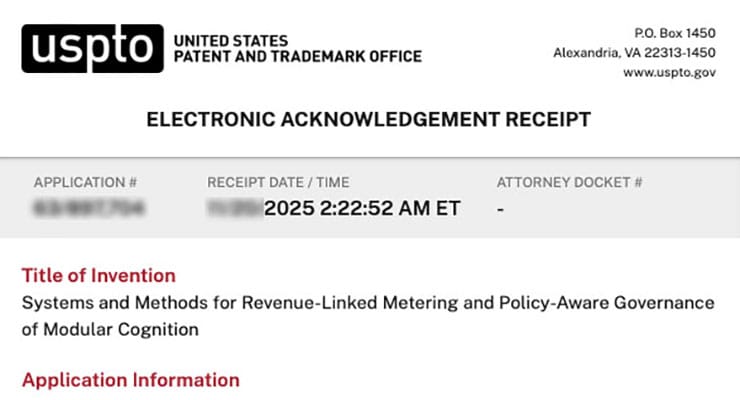

Systems and Methods for Revenue-Linked Metering and Policy-Aware Governance of Modular Cognition

By Alan Jacobson | RevenueModel.ai

Software and online services have historically relied on a small number of familiar revenue models. Early enterprise software was typically sold as perpetual licenses with maintenance contracts. As delivery moved to the web, subscription models became dominant: customers paid a fixed monthly or annual fee per seat or per account, regardless of exact usage. Consumer-facing platforms often adopted advertising-supported models, in which user attention and data are monetized indirectly through targeted ads. Marketplaces and app stores layered transaction fees, revenue share, and in-app purchases on top of these models.

Cloud computing further shifted revenue from static licensing to metered consumption. Infrastructure providers began charging for compute, storage, bandwidth, and ancillary services based on actual usage rather than fixed capacity. These usage-based models price at the level of low-level resources (CPU time, memory, disk, network) and expose those metrics via detailed invoices and dashboards. Enterprises increasingly mix fixed commitments (reserving some baseline capacity) with pay-as-you-go bursts, often under long-term contracts with negotiated discounts.

With the emergence of large language models and other generative AI systems, providers have broadly adopted a similar consumption-based approach, but with new value metrics. For text and multimodal models exposed via APIs, the dominant billing unit is a “token,” roughly corresponding to a short fragment of text. Commercial providers such as OpenAI, Anthropic, and Google publish price lists denominated in cost per thousand or per million tokens, often differentiating between input tokens and output tokens and between model families and context window sizes.

Pricing comparisons across leading models show that the competitive landscape is framed primarily in terms of token rates. For example, public analyses normalize provider offerings to dollars per thousand tokens and compare tiers such as “small,” “standard,” and “premium” models by effective cost per unit of text processed. This reinforces tokens as the basic economic measure of generative AI usage.
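The token arithmetic described above can be illustrated with a minimal sketch. It assumes a provider quotes separate input and output prices per million tokens; the `TokenRates` type, the helper names, and the "small" and "premium" prices are hypothetical placeholders, not any provider's actual list prices. `Decimal` is used so that currency amounts are not distorted by binary floating point.

```python
from dataclasses import dataclass
from decimal import Decimal

PER_MILLION = Decimal(1_000_000)


@dataclass(frozen=True)
class TokenRates:
    """Hypothetical USD prices per million tokens (placeholders only)."""
    input_per_million: Decimal
    output_per_million: Decimal


def request_cost(rates: TokenRates, input_tokens: int, output_tokens: int) -> Decimal:
    """Price input and output tokens at their separate rates, then sum."""
    return (input_tokens * rates.input_per_million
            + output_tokens * rates.output_per_million) / PER_MILLION


def per_thousand(price_per_million: Decimal) -> Decimal:
    """Normalize a per-million-token price to a per-thousand-token price."""
    return price_per_million / 1000


# Hypothetical tiers used only to show the normalization.
SMALL = TokenRates(Decimal("0.50"), Decimal("1.50"))
PREMIUM = TokenRates(Decimal("10.00"), Decimal("30.00"))

if __name__ == "__main__":
    for name, rates in (("small", SMALL), ("premium", PREMIUM)):
        print(name,
              request_cost(rates, input_tokens=2_000, output_tokens=500),
              per_thousand(rates.input_per_million))
```

Because output tokens are often priced higher than input tokens, two requests with the same total token count can cost different amounts. This is one reason comparisons normalize each rate separately.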

In addition to token-based APIs, some providers offer AI capabilities as features inside broader SaaS products. These offerings often use hybrid pricing: a base subscription (for seats or workspaces) combined with metered charges for AI features, such as numbers of AI actions, generated documents, processed images, or minutes of audio. Industry playbooks on “usage-based AI pricing” describe this as a way to map pricing more tightly to perceived value, allowing customers to “pay for what they actually need” while vendors offset variable AI compute costs and maintain margins.

This evolution has led to a monetization landscape in which three families of pricing models dominate AI-enabled services:

- Pure subscription: fixed recurring fees for access to AI functionality, often with soft or implicit usage caps.

- Pure consumption: pay-per-use models based on tokens, API calls, documents processed, or similar quantitative metrics.

- Hybrid subscription-plus-consumption: a base subscription combined with metered overages or pooled allowances of AI credits.
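The three pricing families listed above can be contrasted in a short sketch that computes one billing period's charge under each. The function names and parameters are hypothetical. The hybrid case assumes the simplest form of a pooled allowance, where usage up to an included quantity is covered by the base fee and each unit beyond it is billed at an overage price.

```python
from decimal import Decimal


def subscription_charge(fee: Decimal, _units_used: int) -> Decimal:
    """Pure subscription: a fixed fee; usage does not change the charge."""
    return fee


def consumption_charge(unit_price: Decimal, units_used: int) -> Decimal:
    """Pure consumption: pay per unit (tokens, calls, documents, ...)."""
    return unit_price * units_used


def hybrid_charge(base_fee: Decimal, included_units: int,
                  overage_price: Decimal, units_used: int) -> Decimal:
    """Hybrid: base fee covers an allowance; units beyond it are metered."""
    overage_units = max(0, units_used - included_units)
    return base_fee + overage_price * overage_units


if __name__ == "__main__":
    used = 12_500  # hypothetical units consumed in one period
    print(subscription_charge(Decimal("100"), used))
    print(consumption_charge(Decimal("0.01"), used))
    print(hybrid_charge(Decimal("100"), 10_000, Decimal("0.01"), used))
```

Under these placeholder numbers, the hybrid charge equals the subscription fee until the allowance is exhausted. After that point it grows at the consumption rate.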

At the same time, a separate category of “monetization infrastructure” has emerged to support these usage-based models. Specialized billing and metering platforms, including Metronome, Amberflo, Lago, and other usage-billing systems, position themselves as infrastructure for modern consumption-based pricing. These platforms provide tools to:

- Capture fine-grained usage events (for example, API calls, tokens, compute minutes) in real time.

- Rate those events according to configurable pricing rules (tiers, volume discounts, minimums, pre-purchased credits).

- Generate invoices and usage statements.

- Integrate usage and revenue data with enterprise finance systems.
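The "rating" step listed above can be sketched as follows. The example applies graduated tier pricing to a metered quantity, then a minimum commitment, then a drawdown of prepaid credits. The tier boundaries, prices, and helper names are hypothetical. The order in which minimums and credits are applied is also an assumption, because real contracts and billing platforms differ on this point.

```python
from decimal import Decimal
from typing import Optional

# Hypothetical graduated tiers: (upper bound in units, or None if unbounded; price per unit).
TIERS: list[tuple[Optional[int], Decimal]] = [
    (1_000_000, Decimal("0.002")),
    (10_000_000, Decimal("0.0015")),
    (None, Decimal("0.001")),
]


def rate_graduated(units: int, tiers=TIERS) -> Decimal:
    """Charge each unit at the price of the tier it falls into."""
    total = Decimal(0)
    lower = 0
    for upper, price in tiers:
        if units <= lower:
            break  # no usage reaches this tier
        in_tier = (units if upper is None else min(units, upper)) - lower
        total += in_tier * price
        if upper is None:
            break
        lower = upper
    return total


def invoice_amount(units: int, minimum_commitment: Decimal,
                   prepaid_credits: Decimal) -> Decimal:
    """Apply a minimum commitment, then draw down prepaid credits (assumed order)."""
    billable = max(rate_graduated(units), minimum_commitment)
    return max(Decimal(0), billable - prepaid_credits)


if __name__ == "__main__":
    print(rate_graduated(12_000_000))
    print(invoice_amount(500_000, Decimal("1500"), Decimal("200")))
```

Graduated tiers price each slice of usage separately. Volume tiers, which reprice all units at the rate of the highest tier reached, would replace the loop with a single lookup.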

Industry discussions emphasize that metering itself is a critical technical challenge, separate from billing. Engineering reports on implementing AI credit systems note that reliable, low-latency usage tracking is “core infrastructure,” and that failures in metering can break both pricing and customer trust.
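One common source of metering error is duplicate delivery of the same usage event, for example when a producer retries after a timeout. A standard mitigation is to attach an idempotency key to each event and count each key only once. The in-memory sketch below illustrates that technique. The `UsageEvent` fields and the `Meter` class are hypothetical. A production meter would also need durable storage and bounded retention for seen keys.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    event_id: str      # hypothetical idempotency key set by the producer
    customer_id: str
    metric: str        # e.g. "input_tokens"
    quantity: int


class Meter:
    """In-memory meter that counts each event_id at most once."""

    def __init__(self) -> None:
        self._seen: set[str] = set()
        self._totals: defaultdict[tuple[str, str], int] = defaultdict(int)

    def ingest(self, event: UsageEvent) -> bool:
        """Record the event; return False if it is a duplicate delivery."""
        if event.event_id in self._seen:
            return False
        if event.quantity < 0:
            raise ValueError("usage quantity must be non-negative")
        self._seen.add(event.event_id)
        self._totals[(event.customer_id, event.metric)] += event.quantity
        return True

    def total(self, customer_id: str, metric: str) -> int:
        """Aggregated usage for one customer and metric (0 if none)."""
        return self._totals.get((customer_id, metric), 0)


if __name__ == "__main__":
    meter = Meter()
    event = UsageEvent("evt-1", "acme", "input_tokens", 1200)
    meter.ingest(event)
    meter.ingest(event)  # retried delivery is ignored
    print(meter.total("acme", "input_tokens"))
```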

Finance and accounting practitioners have begun to address the implications of usage-based and AI-driven revenue for revenue recognition and audit. Guidance aimed at CFOs and controllers explains that usage-based pricing greatly increases the volume and variability of revenue events, creating complexity under standards such as ASC 606. Articles on audit risk highlight “revenue recognition based on user behavior without a clear audit trail” as a red flag and note that many organizations now rely on AI or automated systems to decide when and how revenue is recognized from usage logs. The proposed remedies focus on better event data, reconciled metering, and systems capable of producing evidentiary records for finance audits.

Academic work has also begun to examine AI pricing as an economic design problem. Recent research in mechanism design, such as the paper titled “Token is All You Price,” models a generative AI platform that screens heterogeneous users and shows that, under its assumptions, a single aligned model combined with token caps can implement the revenue-optimal mechanism. This line of work treats tokens and token limits as the central instrument for extracting revenue and managing user behavior, separate from broader questions of safety, regulatory compliance, or long-term memory.

In parallel, large enterprises and SaaS vendors are experimenting with outcome-linked pricing for AI-enabled offerings. In these arrangements, fees are sometimes tied to business metrics such as cost savings, lead conversion, or workflow throughput, rather than only to raw usage. Advisory firms describe “outcome-based pricing” as one of several patterns in AI-enabled SaaS contracts and focus on how such structures affect revenue recognition and performance obligations. However, even in these models, billing generally remains anchored to contractual fee schedules, milestones, or usage counts, rather than to any explicit notion of governed AI behavior.
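A minimal sketch of one outcome-linked fee structure follows, assuming the contract sets the fee as a percentage of a measured business metric (here, cost savings) and clamps it between a contractual floor and cap. The function name, parameters, and numbers are hypothetical. The function also illustrates the point above: the fee depends only on the measured outcome and the contract terms, not on how the AI system behaved while producing it.

```python
from decimal import Decimal


def outcome_fee(measured_savings: Decimal, share: Decimal,
                floor: Decimal, cap: Decimal) -> Decimal:
    """Fee = share of measured savings, clamped to the range [floor, cap]."""
    if floor > cap:
        raise ValueError("floor must not exceed cap")
    raw = max(Decimal(0), measured_savings) * share  # negative savings earn nothing
    return min(max(raw, floor), cap)


if __name__ == "__main__":
    # Hypothetical contract: 10% of savings, minimum 1,000, maximum 20,000.
    for savings in ("0", "50000", "500000"):
        print(savings, outcome_fee(Decimal(savings), Decimal("0.10"),
                                   Decimal("1000"), Decimal("20000")))
```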

Despite this activity, several common characteristics of the current state of AI revenue can be observed:

- The primary unit of monetization is a usage metric such as tokens, requests, compute time, documents processed, or similar quantitative measures of activity.

- Pricing and billing systems typically treat safety policies, content governance rules, and user-protection measures as out-of-band concerns. The billing infrastructure meters what occurs, but does not natively distinguish between safe and unsafe usage.

- Audit trails are primarily designed to support financial reporting, revenue recognition, and dispute resolution around invoices, rather than to provide a unified evidentiary record of how AI systems behaved under applicable laws, regulations, and internal policies.

- Vertical and regulatory contexts—such as healthcare privacy rules, child-protection statutes, or financial-services regulations—are often handled through product configuration, contractual commitments, or separate compliance tooling, not through the definition of the revenue unit itself.

As a result, there is a structural gap between how AI usage is monetized and how AI behavior is governed. Existing revenue models and billing infrastructures are well-suited to counting and charging for tokens, requests, or generic “AI actions,” and they increasingly provide detailed financial audit trails. However, they generally do not treat a governed AI interaction—one that is evaluated against policies, constrained by regulatory regimes, and instrumented with safety decisions and supporting evidence—as the fundamental economic unit.

This separation makes it difficult for operators, regulators, and other stakeholders to answer, from a single, coherent record, questions such as:

- What exactly was the AI system asked to do in a particular interaction?

- What data, memory, and external tools did it access?

- What policies and regulatory rules applied, and how were they enforced?

- What did the system allow, deny, abstain from, or escalate to a human, and why?

- How was that interaction monetized, and what revenue was recognized from it?

Current approaches typically require correlating multiple independent logs and systems—model telemetry, safety filters, policy engines, metering pipelines, billing platforms, and finance ledgers—to reconstruct the behavior and economic impact of AI systems in specific cases. That reconstruction is complex and fragile, particularly at the scale and event volume associated with modern generative AI deployments.
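The correlation problem described above can be illustrated with a small sketch. It joins per-system records for one interaction and reports which systems have no matching record. The sketch makes a strong simplifying assumption: every system logs under a shared `interaction_id`. In practice that shared key often does not exist, and records must be matched on timestamps, request identifiers, or account fields, which makes reconstruction more fragile. All system names and record fields below are hypothetical.

```python
from typing import Any

# interaction_id -> record, one mapping per independent system (hypothetical shape)
Log = dict[str, dict[str, Any]]


def reconstruct(interaction_id: str,
                logs: dict[str, Log]) -> tuple[dict[str, Any], list[str]]:
    """Join per-system records for one interaction; list systems lacking one."""
    view: dict[str, Any] = {}
    missing: list[str] = []
    for system, records in logs.items():
        record = records.get(interaction_id)
        if record is None:
            missing.append(system)
        else:
            view[system] = record
    return view, missing


if __name__ == "__main__":
    logs: dict[str, Log] = {
        "model_telemetry": {"i-42": {"model": "example-model", "output_tokens": 512}},
        "safety_filter": {"i-42": {"decision": "allow"}},
        "policy_engine": {"i-42": {"rules_applied": ["example-rule"]}},
        "metering": {"i-42": {"tokens": 1_536}},
        "billing": {},          # event not yet rated or invoiced
        "finance_ledger": {},   # revenue not yet recognized
    }
    view, missing = reconstruct("i-42", logs)
    print(sorted(view))
    print(missing)
```

Even in this idealized case, any gap in one system leaves the combined record incomplete. The questions listed above cannot be answered from the joined view until every contributing log has been located and reconciled.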

This is the complete BACKGROUND section of the SPECIFICATION. The entire SPECIFICATION is available for inspection under NDA upon remittance of the EVALUATION FEE.