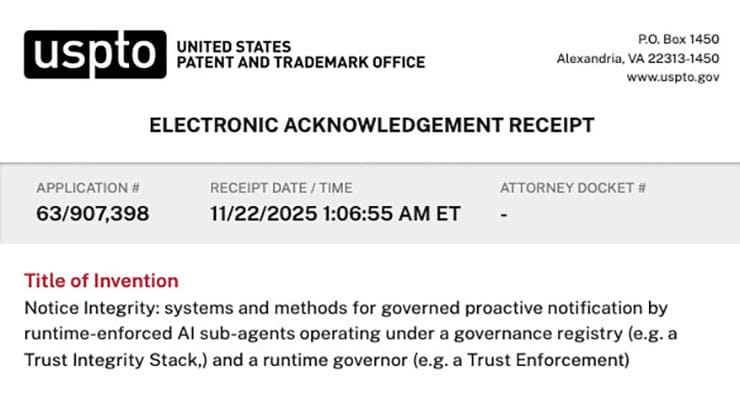

Notice Integrity: systems and methods for governed proactive notification by runtime‑enforced AI sub‑agents operating under a governance registry (e.g., a Trust Integrity Stack) and a runtime governor (e.g., Trust Enforcement)

By Alan Jacobson | RevenueModel.ai

Artificial intelligence systems increasingly engage users across health, finance, education, transportation, home automation, and personal productivity. As these systems shift from reactive tools to autonomous agents capable of anticipating needs, there is a growing requirement for proactive notifications that are not merely triggered but governed. No matter the domain, the fundamental principle remains: the user should be the administrator of their notifications, except in narrow categories where public‑safety policy or platform‑level safeguards supersede user control.

Existing Notification Systems and Their Limitations

Consumer ecosystems already include partial notification capabilities. Apple’s Siri sets alarms and reminders; Android provides notification channels; Alexa routines enable conditional behaviors; and smartphones deliver non‑blockable emergency alerts, such as AMBER alerts or imminent weather warnings mandated by public‑safety frameworks. Some AI assistants and LLM platforms have begun introducing hard‑wired alerts for events they deem to be in a user’s best interest. These represent the current state of the art: fragmented, inconsistent, and governed by a mix of device rules, app settings, platform judgments, and statutory exceptions.

However, even with these systems, the following limitations remain:

- Outside of public‑safety mandates and limited platform‑level exceptions, users cannot define governance policies such as risk thresholds, contextual suppression, cross‑domain rules, or audit requirements.

- Existing AI assistants may provide “best‑interest” hard‑wired alerts, but there is no unified or transparent governance model.

- Reminders and alerts fire without any prior evaluation of their value, risk, timing, or context.

- Critical alerts lack immutable records documenting why an alert was sent, what triggered it, and whether it passed any policy gate.

- No cross‑domain governed notification layer exists that unifies urgent alerts, benign proactive help, user‑defined preferences, AI‑inferred needs, and policy‑based conditions.

There is no proactive notification system in which:

- The user governs all non‑mandatory and non‑platform‑critical alerts.

- Platform‑level or statutory alerts remain intact and clearly disclosed.

- Every notification passes a governance and context evaluation before sending.

- Routine and high‑risk alerts follow the same accountable framework.

- Every event generates an immutable receipt.

- Overrides, suppressions, and escalations respect user preference except where legally restricted.
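To make the idea of an immutable receipt concrete, the following is a minimal sketch of one common way to approximate it: an append-only log in which each receipt commits to the hash of the one before it. The class name `ReceiptLog`, the field names, and the choice of SHA-256 are illustrative assumptions and are not taken from the specification. Note that a hash chain makes tampering detectable rather than impossible.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class ReceiptLog:
    """Hypothetical append-only log; each receipt links to the previous hash."""
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> dict:
        # The first receipt links to a fixed all-zero placeholder hash.
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"event": event, "prev_hash": prev_hash}
        receipt = {**body, "hash": _digest(body)}
        self.entries.append(receipt)
        return receipt

    def verify(self) -> bool:
        # Recompute every hash and check every link in order.
        prev_hash = "0" * 64
        for r in self.entries:
            body = {"event": r["event"], "prev_hash": r["prev_hash"]}
            if r["prev_hash"] != prev_hash or r["hash"] != _digest(body):
                return False
            prev_hash = r["hash"]
        return True
```

In this sketch, an event such as `{"trigger": "low_balance", "decision": "sent", "policy": "finance-default"}` (all hypothetical values) would record why an alert was sent, what triggered it, and which policy gate it passed. Editing any stored receipt afterwards causes `verify()` to return `False`.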

As AI systems take on greater autonomy, a governed proactive notification framework is required—one in which:

- Users define what non‑mandatory alerts they want and when.

- Platform‑level “best‑interest” notices and statutory alerts remain intact and clearly disclosed.

- The system evaluates each potential notification through policy, context, stored intent, value, and risk.

- Alerts—urgent or routine—produce a tamper‑proof receipt.

- Cross‑domain signals are processed by a single governed layer.

- The user remains the administrator for all notifications that are not legally mandated or platform‑critical, while the governed proactive notification (GPN) framework remains compatible with both categories.
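The requirements listed above can be illustrated with a small policy gate. This is a minimal sketch under stated assumptions, not the method described in the specification: the category names, the 0 to 1 `value` and `risk` scores, the threshold defaults, and the order of checks are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import NamedTuple


class Category(Enum):
    STATUTORY = "statutory"              # e.g. public-safety alerts
    PLATFORM_CRITICAL = "platform-critical"
    USER_GOVERNED = "user-governed"      # everything the user administers


@dataclass
class Candidate:
    category: Category
    domain: str      # e.g. "health", "finance"
    value: float     # assumed 0..1 estimate of usefulness
    risk: float      # assumed 0..1 estimate of harm if not delivered


@dataclass
class UserPolicy:
    enabled_domains: set
    min_value: float = 0.5        # hypothetical default threshold
    risk_escalation: float = 0.8  # hypothetical default threshold
    quiet: bool = False           # contextual suppression, e.g. do-not-disturb


class Decision(NamedTuple):
    action: str   # "send", "defer", or "suppress"
    reason: str


def evaluate(c: Candidate, p: UserPolicy) -> Decision:
    # Statutory and platform-critical notices are outside user control.
    if c.category is not Category.USER_GOVERNED:
        return Decision("send", f"{c.category.value} notice bypasses user policy")
    if c.domain not in p.enabled_domains:
        return Decision("suppress", "domain disabled by user")
    # High-risk alerts escalate past contextual suppression.
    if c.risk >= p.risk_escalation:
        return Decision("send", "risk at or above escalation threshold")
    if p.quiet:
        return Decision("defer", "contextual suppression active")
    if c.value < p.min_value:
        return Decision("suppress", "value below user threshold")
    return Decision("send", "passed all policy checks")
```

Every branch returns a reason alongside the action, so routine and urgent alerts pass through the same accountable path and each outcome can be recorded. A real system would also need to decide how stored intent and cross-domain rules feed into the scores, which this sketch leaves out.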

This is the complete BACKGROUND section of the SPECIFICATION. The entire SPECIFICATION is available for inspection under NDA upon remittance of the EVALUATION FEE.