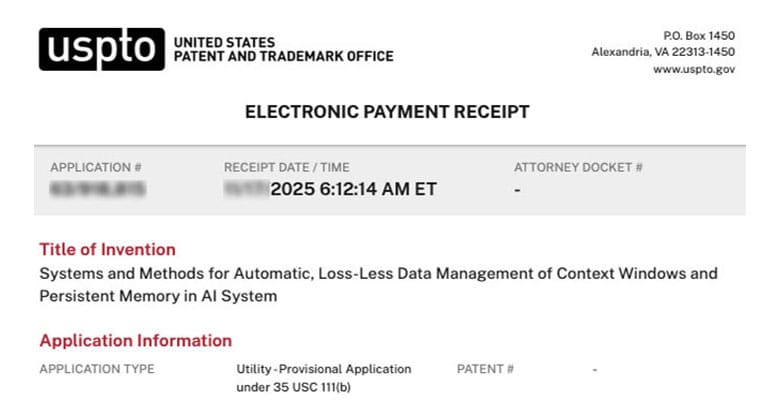

Systems and Methods for Automatic, Loss-Less Data Management of Context Windows and Persistent Memory in AI Systems

By Alan Jacobson | RevenueModel.ai

Modern artificial intelligence systems, particularly large language models (LLMs), remain fundamentally constrained by how they manage, retain, and retrieve information across time. Although these systems are capable of producing fluent, context-aware output within a single conversation, their ability to sustain continuity, accuracy, and coherence across extended interactions is limited by architectural factors inherited from earlier generations of machine learning systems.

The most significant constraint is the reliance on a bounded context window. An LLM’s usable working memory is defined by the number of tokens it can attend to at once, and all reasoning must occur within this window. As interactions accumulate, older information must be truncated, summarized, or discarded to make room for new input. This creates a brittle foundation for any task requiring continuity across long-running workflows, high-stakes decision-making, multi-session collaboration, longitudinal personalization, or environments demanding accuracy across evolving sequences of facts.
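
To make the truncation problem concrete, here is a minimal Python sketch of the naive policy: keep the newest turns that fit a fixed token budget and drop everything older. It assumes whitespace-separated words as a stand-in for tokens (production models use subword tokenizers, so real counts differ), and `fit_to_window`, `count_tokens`, and the sample history are hypothetical illustrations rather than any particular system's code.

```python
def count_tokens(text):
    """Assumption: whitespace-separated words stand in for model tokens."""
    return len(text.split())

def fit_to_window(turns, budget):
    """Keep the most recent turns whose combined size fits within `budget`.

    Turns are considered from newest to oldest; once one does not fit,
    it and every older turn are discarded (first-in, first-out eviction).
    """
    kept = []
    used = 0
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept

history = [
    "User: Never exceed a budget of 5000 dollars.",  # early constraint
    "Assistant: Understood.",
    "User: Draft a vendor shortlist for the project.",
    "Assistant: Here are three vendors with quotes.",
    "User: Which vendor should we pick?",
]

window = fit_to_window(history, budget=22)
print(len(window))                                # 3
print(any("5000" in turn for turn in window))     # False
```

With a 22-word budget only the last three turns survive, so the spending limit stated in the first turn is silently dropped and the final question is answered without it.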

As a result, existing systems routinely lose critical details, forget prior constraints, and mismanage dependencies once the conversation exceeds the available working memory. This creates well-documented vulnerabilities: incoherence, hallucinations, contradictory statements, inconsistent behavior, and loss of important user-provided information. To compensate, developers and users are forced to repeatedly re-supply information the system should conceptually retain, driving up cost, latency, and user burden.

Because the default behavior of most deployed models is stateless, nearly every persistence mechanism in the commercial and research ecosystem has been bolted on rather than built in. These mechanisms fall into several categories:

- Working memory extensions that attempt to manage the context window more efficiently by trimming or summarizing older turns to preserve coherence at the edges of the window. These methods often collapse under long or complex workflows because summarization can be lossy, error-prone, or insufficiently structured.

- Retrieval-augmented generation (RAG) systems that store documents, prior interactions, or vectorized summaries in an external datastore. At inference time, relevant items are retrieved and reinserted into the prompt. While this provides the illusion of increased memory, most implementations treat stored content as static text rather than as structured knowledge with provenance, authority, temporal relevance, or dependency relationships. RAG also remains fundamentally lossy: information must be chunked, embedded, and reassembled, and important details can be dropped or misweighted.

- Agent-oriented memory frameworks that incorporate episodic, semantic, or reflective memory subsystems. These systems may store transcripts, preferences, entities, or high-level summaries, but they typically rely on heuristic policies for deciding what to remember or forget. Most do not guarantee that stored information is complete, accurately scoped, or internally consistent across time.

- OS-style virtual memory analogues, such as MemGPT, that introduce multi-tiered memory management and swapping strategies. These systems demonstrate that structured memory layers can extend usable context, but they depend on heuristics or manually defined policies for summarization, forgetting, and priority ordering. They also tend to treat memory as a task-state artifact rather than as a durable, governed resource.

- Commercial assistant memory features introduced by major AI providers, which allow users to request that certain facts be “remembered.” These systems expose persistent memory directly to end users, but often without robust governance, auditability, conflict handling, or protection from storing incorrect or unsafe information. Public reporting has highlighted concerns around privacy, intrusive recall, and unexpected resurfacing of sensitive content.
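
The lossiness attributed to RAG in the list above can be seen even in a toy pipeline. The following sketch assumes fixed-size, non-overlapping word chunking and uses word-overlap counting as a crude stand-in for embedding similarity; `chunk_words`, `word_set`, `retrieve`, and the sample note are illustrative names and data, not any particular framework's API.

```python
import re

def chunk_words(text, size):
    """Split text into consecutive, non-overlapping chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def word_set(text):
    """Lower-cased alphanumeric words; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, chunks, top_k=1):
    """Rank chunks by how many query words they share and keep the top_k."""
    q = word_set(query)
    ranked = sorted(chunks, key=lambda c: len(q & word_set(c)), reverse=True)
    return ranked[:top_k]

# Illustrative stored note: the date and its condition form a single fact.
note = ("The renewal deadline for the Acme contract is March 3, "
        "unless legal approves an extension in writing before February 15.")

chunks = chunk_words(note, size=8)
context = retrieve("When is the Acme renewal deadline?", chunks)
print(context)  # ['The renewal deadline for the Acme contract is']
```

The top-ranked chunk matches the query's vocabulary but contains neither the date nor the condition attached to it, because both landed in other chunks. Overlapping or larger chunks and a higher `top_k` reduce the chance of such a split, but any chunk boundary can still separate a fact from its qualifiers.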

Across these categories, several structural limitations persist:

- Lossy storage and retrieval. Many memory systems sacrifice information fidelity due to summarization, embedding, or chunking. Once compressed or abstracted, the original detail cannot be reconstructed reliably.

- No standardized mechanism for dependency tracking. Current memory systems rarely store or reason over causal, hierarchical, or temporal dependencies. As a result, when information is recalled, the system may not understand whether upstream or downstream facts remain valid, authoritative, or mutually consistent.

- Lack of integrated policy governance. Existing frameworks typically leave privacy, safety, compliance, and retention rules to application-level implementers. They do not enforce standardized guardrails for regulated domains such as healthcare, finance, education, aviation, or minors’ safety.

- Fragmentation between working memory and long-term memory. Context windows operate in one paradigm (immediate, limited, transient), while persistent stores operate in another (external, potentially unbounded, loosely structured). These two layers are rarely integrated into a unified, coordinated system capable of loss-less transitions between them.

- No coherent strategy for multi-session continuity. Users working across multiple days or weeks must repeatedly re-educate their AI systems because current memory solutions lack automatic, governed, and reliable mechanisms for carrying relevant state forward.

- Economic inefficiency. Re-supplying long histories inflates token usage and latency. The usual alternatives, embedding or summarizing large histories, add their own storage and retrieval overhead. Existing systems disproportionately shift this cost and friction onto users and developers.

- Inconsistent reliability at scale. As interaction histories grow, unstructured memories accumulate noise, conflicting entries, duplicated facts, and outdated assumptions. Without standardized mechanisms for provenance, versioning, conflict resolution, and drift correction, persistent memory becomes less reliable over time.

- Lack of a unified theoretical model. Current work spans RAG, memory networks, episodic controllers, reflective systems, and commercial memory features, but these approaches do not converge on a shared framework for data integrity, consistency, safety, or continuity across the short-term/long-term memory boundary.
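
The dependency-tracking and versioning gaps listed above can be made concrete with a small sketch. A flat key-value memory would keep returning a derived budget after the team size it was computed from changes, with no signal that it rests on a superseded fact. The hypothetical `DependencyAwareStore` below (all names and sample facts are illustrative, and this is a generic illustration of dependency tracking rather than a description of any particular system) records which facts were derived from which, bumps a version number on each write, and marks downstream facts stale when an upstream value changes.

```python
from dataclasses import dataclass, field

@dataclass
class Fact:
    key: str
    value: str
    depends_on: list = field(default_factory=list)  # keys of upstream facts
    version: int = 1
    stale: bool = False

class DependencyAwareStore:
    """Hypothetical memory store that tracks derivation links between facts."""

    def __init__(self):
        self.facts = {}

    def put(self, key, value, depends_on=()):
        old = self.facts.get(key)
        version = old.version + 1 if old else 1
        self.facts[key] = Fact(key, value, list(depends_on), version)
        if old is not None and old.value != value:
            self._invalidate_dependents(key)

    def _invalidate_dependents(self, changed_key):
        # Walk downstream transitively: a fact derived from a changed fact is
        # stale, and so is anything derived from it. The `stale` check also
        # prevents infinite loops if dependencies ever form a cycle.
        frontier = [changed_key]
        while frontier:
            upstream = frontier.pop()
            for fact in self.facts.values():
                if upstream in fact.depends_on and not fact.stale:
                    fact.stale = True
                    frontier.append(fact.key)

    def get(self, key):
        return self.facts[key]

store = DependencyAwareStore()
store.put("team_size", "8 engineers")
store.put("monthly_budget", "$96k", depends_on=["team_size"])
store.put("hiring_plan", "no new hires in Q3", depends_on=["monthly_budget"])

store.put("team_size", "12 engineers")       # upstream fact changes

print(store.get("monthly_budget").stale)     # True
print(store.get("hiring_plan").stale)        # True
```

Flagging rather than silently rewriting derived facts keeps the original values available for audit while signaling that they need to be re-derived or re-confirmed before use.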

As persistent memory becomes mainstream—appearing in commercial assistants, developer frameworks, and enterprise systems—the shortcomings above become more pronounced. Users expect AI systems to remember accurately without losing detail, to maintain continuity without contradiction, and to integrate information across sessions without forcing users to restate known facts. Enterprises and regulated industries require even more: auditability, reproducibility, policy compliance, verifiable data provenance, and reliable integration between ephemeral working memory and durable long-term memory.

Despite significant progress in the field, the underlying challenge remains unresolved: existing systems lack a unified, loss-less, automatic, and governed method for managing information across the context window boundary while maintaining continuity, correctness, dependency integrity, and safety across time.

This gap in the current state of the art creates the need for improved systems and methods capable of reliably managing short-term and long-term memory in AI systems, maintaining information fidelity across memory transitions, and supporting safe, auditable, long-duration collaboration with users.