Rebuilding trust in AI and America with an “Elegant Solution”

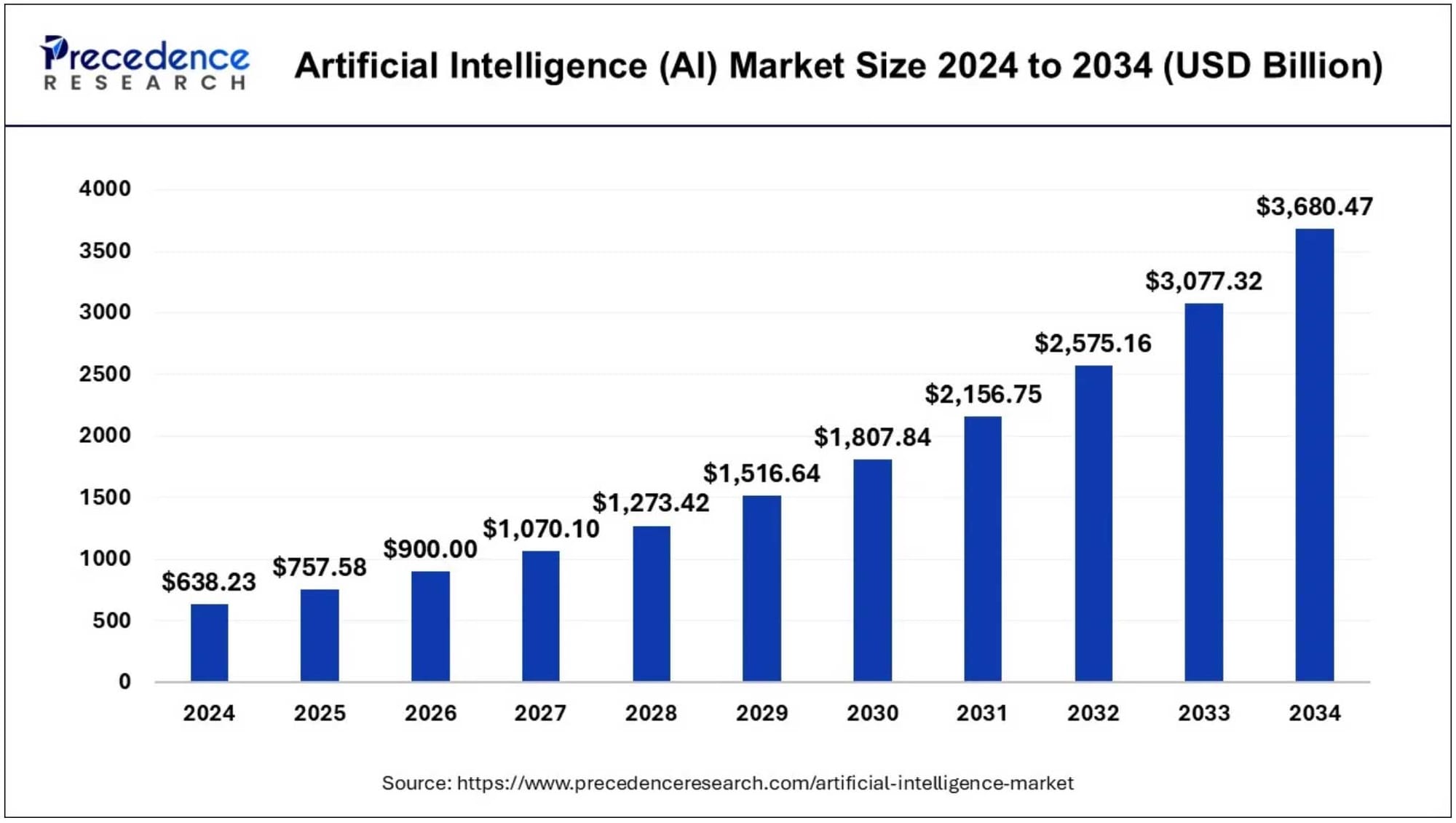

For months, almost everyone in AI made the same mistake: They trusted the projections instead of the data.

Every chart pointed up. Every forecast curved skyward. Even the “conservative” projections showed clean diagonal growth — not a hockey stick, but something close enough to imply inevitability.

But when I pulled the real numbers — not the projections — I found something else entirely.

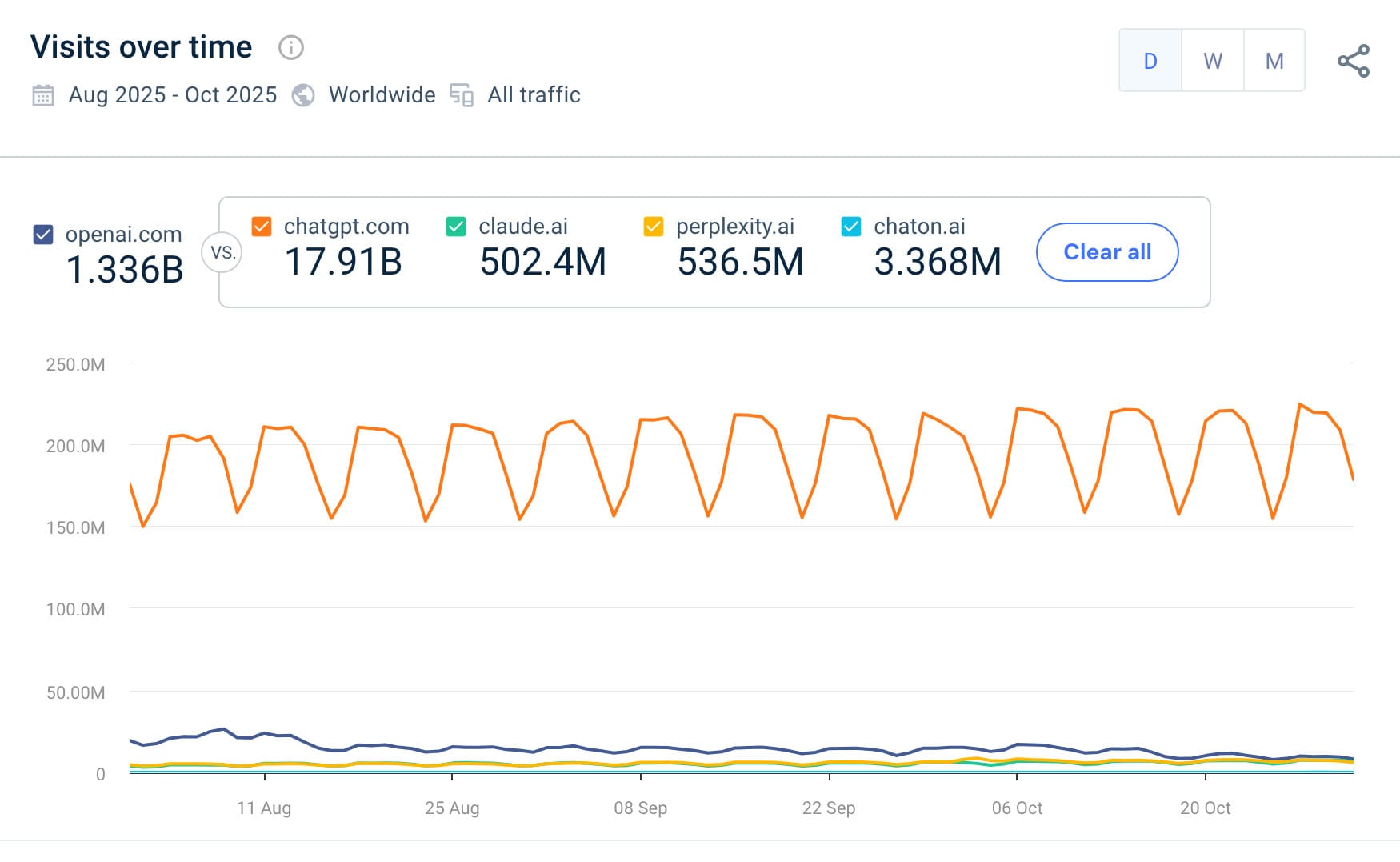

AI growth has been flat for four months.

Not slowing down. Flat.

Across all the major LLMs.

Across web traffic and app downloads.

A stall, not a surge.

Everyone believed the projection. Almost no one checked the pulse.

I did.

And the truth turned out to be structural, not cosmetic:

No memory → no trust → no adoption → no scale.

Users can’t build trust with a system that forgets everything.

And a system that forgets everything will never reach scale.

If and when the bubble bursts, it won’t be because AI failed. It will be because trust failed.

And that’s not just an AI problem.

America has the same problem.

A crisis of trust — in AI and in America

We’re living in two overlapping trust failures:

- A technological trust failure — AI tools that forget, hallucinate and cannot be relied on.

- A civic trust failure — a press that has been hollowed out, underfunded and unable to meet the moment.

You can’t have a healthy democracy without a trustworthy press.

You can’t have a scaled AI ecosystem without trustworthy memory.

These two failures look separate.

They’re not.

They’re the same failure, wearing two different masks.

And that leads us to the Elegant Solution.

What designers mean by an “Elegant Solution”

Webster’s will tell you an elegant solution is “clean, simple, spare.”

A designer will tell you something else entirely.

Designers use the phrase “elegant solution” to describe a very specific phenomenon:

Using one problem to solve another problem.

Problem A becomes the mechanism that solves Problem B.

It’s not “killing two birds with one stone,” which means one action solves two problems.

This is different.

An Elegant Solution means Problem A becomes the key that solves Problem B.

It’s structural.

It’s architectural.

It’s beautiful.

The classic example is Daniel Cudzik’s 1975 “Sta-Tab” — the modern soda can top.

- One piece of aluminum.

- No separate pull tab.

- No litter.

- No added parts.

The problem (a sealed can you cannot open) becomes the device that solves it.

The tab itself becomes the lever that opens the can.

That is Elegant.

And that is exactly what we need now.

The Elegant Solution for AI and America

AI’s problem is trust.

America’s problem is trust.

Both failures come from the same underlying collapse: We have no institutions — civic or technological — that hold memory with integrity.

So what do you do?

You use one trust problem to solve the other.

You take the licensing revenue from solving the memory-and-governance problem in AI — and you use it to fund 1,414 new local online newsrooms across the United States.

You fix AI’s trust problem.

And the fix generates the revenue to solve America’s trust problem.

Problem A solves Problem B.

That’s the Elegant Solution. It’s the Sta-Tab at national scale.

What comes next

When a bubble bursts, most people look for a culprit. But the real question — the only question — is:

What comes next? Here’s the answer:

- AI companies will need governed, indelible memory if they want trust and scale.

- That architecture already exists, and a patent application for it has already been filed.

- Licensing that architecture generates a funding engine for public-good journalism.

- Trust in AI goes up.

- Trust in America goes up.

- The two reinforce each other.

Trust rebuilt from both ends — the civic layer and the computational layer.

That is what elegant solutions look like:

Not merely concise.

Not merely graceful.

Not merely clever.

Just true.

And that’s what comes next.