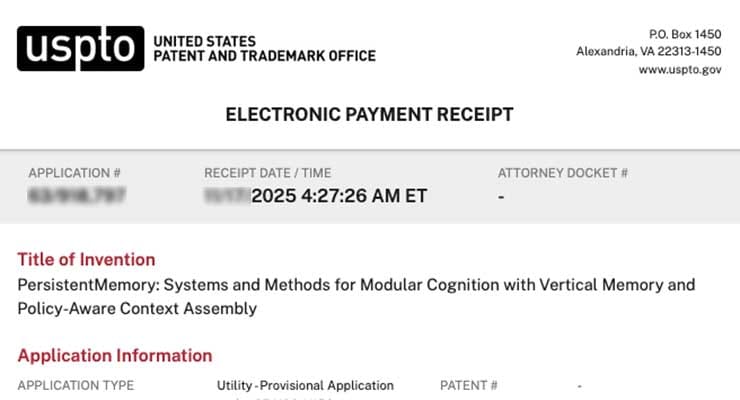

PersistentMemory: Systems and Methods for Modular Cognition with Vertical Memory and Policy-Aware Context Assembly

By Alan Jacobson | RevenueModel.ai

Modern large language models (LLMs) and conversational AI systems remain fundamentally limited by the way they handle memory across time. Out of the box, most LLMs operate as stateless systems: they remember only the portion of an interaction that fits within the model’s context window, and all information outside that window is truncated, summarized, or forgotten unless explicitly re-provided by the user. This constraint is inherited from transformer architectures themselves, which require the full input sequence to be represented within a bounded token limit for attention to operate. As a result, the system’s “working memory” resets whenever the context window overflows.
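The overflow behavior described above can be illustrated with a minimal sketch. It assumes a whitespace word count as a stand-in for a real tokenizer and a simple "keep the newest turns" policy; `count_tokens`, `fit_to_window`, and the sample conversation are hypothetical names and data chosen for illustration, not part of any particular model or product.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def fit_to_window(turns: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent turns that fit the budget; older turns are dropped."""
    kept: deque[str] = deque()
    used = 0
    # Walk backwards from the newest turn and stop at the first one that does not fit.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > max_tokens:
            break
        kept.appendleft(turn)
        used += cost
    return list(kept)


# Hypothetical conversation; the first turn carries a constraint the user expects to persist.
history = [
    "User: My budget cap is 5000 dollars.",
    "Assistant: Noted, I will stay under that cap.",
    "User: Draft a vendor shortlist.",
    "Assistant: Here are three vendors to consider.",
]

window = fit_to_window(history, max_tokens=20)
for turn in window:
    print(turn)
```

With a 20-token budget, the earliest turn, which carries the budget constraint, is the one that falls out of the window.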

This leads to well-known problems: repeated questions, brittle personalization, drift, contradictory statements, and hallucinations that arise when the system loses track of previous facts or constraints. These issues intensify in long-running projects, multi-session work, safety-critical environments, or any domain requiring continuity, context retention, or consistency across days, weeks, or months.

To compensate for these limitations, researchers and practitioners have introduced a variety of external memory systems, most of which fall into one of the following categories:

Retrieval-Augmented Generation (RAG) as De Facto “Memory”

RAG has become the most widely adopted solution for adding external information to LLMs. It works by:

- embedding documents, transcripts, or stored knowledge

- indexing them in a vector database

- retrieving the nearest matches during inference

- injecting retrieved snippets back into the prompt before generation
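The four steps above can be sketched in a few lines. This is a toy illustration under stated assumptions: a bag-of-words count vector stands in for a learned embedding model, an in-memory list stands in for a vector database, and `embed`, `ToyVectorIndex`, `build_prompt`, and the sample documents are hypothetical names and data, not any library's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """In-memory stand-in for a vector database (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Steps 1 and 2: embed the document and store it in the index.
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Step 3: rank stored documents by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(query: str, snippets: list[str]) -> str:
    # Step 4: inject the retrieved snippets into the prompt ahead of the question.
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Context:\n{context}\n\nQuestion: {query}"


index = ToyVectorIndex()
for doc in [
    "The project deadline is March 14.",
    "The client prefers weekly status reports.",
    "Staging servers run Ubuntu 22.04.",
]:
    index.add(doc)

query = "When is the project deadline?"
prompt = build_prompt(query, index.search(query, k=1))
print(prompt)
```

Nothing in this loop records what the assistant previously decided or how the stored facts relate to one another; each query is answered from whichever snippets happen to score highest.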

Because of its popularity, many advanced practitioners—including machine-learning leads, enterprise architects, and AI power users—have come to believe that RAG “solves” the memory problem.

However, despite its usefulness, RAG introduces several limitations that prevent it from functioning as true long-term memory:

- Retrieval issues such as missing content, irrelevant documents, outdated materials, or noisy embeddings

- Generation issues including hallucinations, incomplete synthesis, incorrect formatting, or lost specificity

- System-level issues including latency, scalability bottlenecks, and the challenge of maintaining high-quality corpora

- Lossy storage due to chunking, compression, and embedding, which cannot guarantee fidelity

- Lack of continuity, because retrieved data does not represent the AI’s evolving state, past decisions, or multi-session history

- No durable user profile, only ad hoc retrieval

- No handling of dependencies, timing, or causality

- No fine-grained or governed forgetting mechanisms

- High user and developer overhead for building and maintaining the underlying infrastructure

- Difficulty handling sensitive information, regulated data, or mixed personal–enterprise contexts

- No integrated structure for persistent identity or cross-session context

RAG improves a model’s reach, but it does not provide memory. It provides retrieval.

This distinction is now widely discussed in contemporary literature: retrieval systems supply information “for now,” but they do not maintain structured, evolving, long-lived context “for always.”

Exposed Memory Features in Commercial Assistants

Recent versions of mainstream AI assistants (e.g., ChatGPT, Claude, Gemini) have introduced user-facing memory panels that allow the system to remember facts, preferences, or recurring instructions. These systems represent a significant shift: users now expect an AI to retain long-term information without manual re-teaching.

However, current commercial memory implementations still exhibit important limitations:

- Memory is stored as isolated facts, not organized structures

- No contextual grouping: memories are not linked to projects, domains, tasks, or narratives

- Limited user agency: editing memories is technically possible but cumbersome

- No unified schema across different types of stored data

- High friction for managing, reviewing, or organizing long-term memories

- No mechanism for dependency resolution, time-aware reasoning, or decay

- No reliable method for continuity across long-running workflows

- No system-level guarantee of fidelity, as memories may be summarized or compressed

- No safety constraints preventing sensitive or incorrect information from being stored

- Unpredictable resurfacing of memories, raising user concern about overrecall or inappropriate recall

- Lack of support for enterprise compliance (e.g., retention, redaction, audit)

- No automatic or structured integration with internal model reasoning

These memory features are promising but represent early-stage attempts rather than mature, reliable persistent memory systems.

Reflective, Episodic, and Semantic Memory Research

Academic and industrial research has explored multiple forms of memory for LLMs:

- Episodic memory: storing prior turns, events, or interactions

- Semantic memory: storing stable preferences or facts

- Procedural memory: storing workflows, routines, or tool-use strategies

- Reflective memory: systems that periodically re-examine history and summarize key points

- OS-style tiered memory, such as MemGPT, which uses interrupt-driven reorganization

- Episodic controllers such as Larimar, focusing on sparse, addressable memory updates

- Production frameworks like Mem0, which emphasize cost structure and indexing efficiency

All of these research directions acknowledge the need for memory that extends beyond a single session.

But each exhibits unresolved gaps:

- heuristic filtering of “important” information

- lossy summarization

- difficulty maintaining consistency as histories accumulate

- lack of standardized evaluation metrics

- limited ability to reconcile conflicting memories

- no unified approach to timeline, provenance, or authority

- no automatic mechanism for integrating short-term and long-term memory

- absence of structured, durable, lossless storage

- no cohesive strategy for multi-session continuity across contexts or domains

Fragmentation Between Context Window and External Memory

Despite these innovations, the core architectural problem remains unresolved: the context window and external memory operate as separate, incompatible systems.

The context window is bounded, ephemeral, and token-limited.

External memory is unbounded, persistent, but loosely organized.

Today’s systems provide no unified mechanism for:

- moving information between these layers without loss

- preserving the fidelity of past information over long durations

- maintaining coherence as interactions span multiple sessions

- automatically determining what should be retained long-term

- ensuring that retrieved information reflects current relevance or validity

- supporting continuity across projects, domains, or complex workflows

- reducing user burden by eliminating repetitive explanation

- avoiding drift as the system accumulates fragmented memories

As a result, users experience:

- discontinuity

- inconsistent personalization

- memory bloat

- contradictions

- forgotten constraints

- incorrect recall

- frequent re-explanation of prior context

- fatigue in managing or correcting the AI’s memory

Summary of the State of the Art

The state of persistent memory in AI can be summarized as follows:

- RAG improves retrieval, not memory.

- Commercial assistant memory features remain rudimentary and user-friction-heavy.

- Academic systems provide partial solutions but lack unified mechanisms.

- No current system integrates short-term working memory with long-term, durable memory in a coherent, lossless manner.

- No system provides automatic, structured, policy-aware continuity across time.

- No existing approach eliminates the need for users to continually re-teach the system.

- No architecture reliably maintains context, dependencies, or fidelity across sessions.

- No unified standard exists for organizing, governing, or retrieving persistent memory at scale.

The Resulting Need

Despite substantial research and rapid commercial evolution, persistent memory for AI remains an unsolved problem. The field lacks an automatic, reliable, lossless method for maintaining information across sessions, resolving drift, preserving fidelity, and supporting long-duration human–AI collaboration.

A need therefore exists for improved systems and methods for managing persistent memory in AI systems in a way that is automatic, reliable, structured, durable, user-friendly, continuous, and integrally connected to both the context window and the external memory layer, without loss of information fidelity across time.

This is the complete BACKGROUND section of the SPECIFICATION. The entire SPECIFICATION is available for inspection under NDA upon remittance of the EVALUATION FEE.