Systems and Methods for Automatic, Loss-Less Management of Context Windows in AI Systems

Large language models process language within a finite “context window,” also called context length. The context window is the amount of text, measured in tokens rather than characters or words, that the model can consider at one time when generating a response.

Industry explainers routinely describe the context window as the model’s short-term memory: all user input, system instructions and recent dialogue must fit inside this window to influence the next output.
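
To make the budget constraint concrete, the following Python sketch totals the tokens used by system instructions, recent dialogue, and the current user input, and reports how much of a fixed window remains. It assumes a crude whitespace tokenizer (`count_tokens`) in place of a real model's subword tokenizer, and the names `PromptParts` and `remaining_budget` are hypothetical, not taken from any particular system.

```python
from dataclasses import dataclass


def count_tokens(text: str) -> int:
    # Assumption: whitespace splitting approximates tokenization. Real models
    # use subword tokenizers, which usually yield more tokens than words.
    return len(text.split())


@dataclass
class PromptParts:
    system_instructions: str
    dialogue: list[str]  # recent turns, oldest first
    user_input: str


def remaining_budget(parts: PromptParts, context_window: int) -> int:
    """Tokens left in the window after every prompt component is counted.

    A negative result means the prompt does not fit: something must be
    dropped or compressed before the model can see all of it.
    """
    used = (
        count_tokens(parts.system_instructions)
        + sum(count_tokens(turn) for turn in parts.dialogue)
        + count_tokens(parts.user_input)
    )
    return context_window - used


parts = PromptParts(
    system_instructions="You are a helpful assistant.",
    dialogue=["Hi there.", "Hello! How can I help?"],
    user_input="Summarize our conversation so far.",
)
print(remaining_budget(parts, context_window=32))  # → 15
```

A negative result signals that the prompt overflows the window, which is the point at which a system must truncate, summarize, or otherwise manage context.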

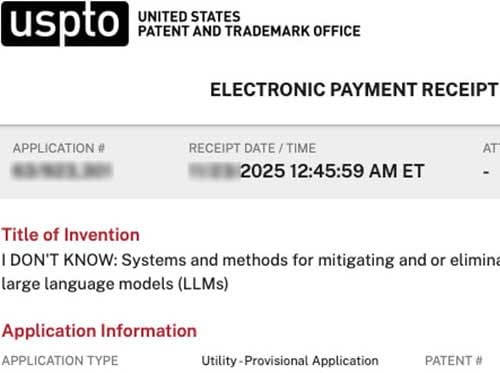

Systems and Methods for Mitigating and/or Eliminating Hallucinations by Large Language Models (LLMs)

Modern societies depend on complex information systems to make decisions in finance, health care, law, education, transportation, logistics, defense, and everyday life. In all of these domains, there is a long-standing expectation that people and systems will acknowledge uncertainty, limits, or incapacity rather than guess. In human settings, phrases like “I don’t know” or “I can’t do that” signal an explicit boundary: the speaker is refusing to fabricate an answer or perform an action that would be unsafe, misleading, or outside their competence.

Trust Enforcement (TE): User-Governed, Runtime-Enforced Constraint Layer for AI Models

The disclosure relates to the field of large-scale software systems, distributed digital services, and artificial intelligence systems that interact with users, enterprises, and regulated environments. Modern digital systems are increasingly complex, interconnected, and governed by overlapping operational, legal, and ethical requirements. As these systems scale, they exhibit failure modes that are subtle, difficult to detect, and capable of causing downstream harm before human operators become aware of the issue.

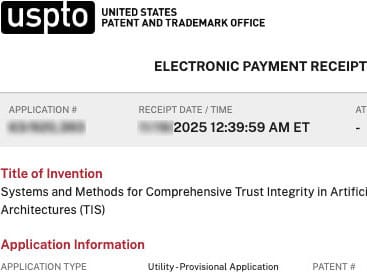

Systems and Methods for Comprehensive Trust Integrity in Artificial Intelligence Architectures (TIS)

Artificial intelligence (AI) and machine learning (ML) systems are increasingly embedded in critical domains including healthcare, finance, education, employment, law enforcement, national security, social media, and mental health support. These systems are no longer confined to low‑stakes recommendation tasks; they make or shape decisions that can affect liberty, livelihood, safety, and reputation. As a result, regulators, enterprises, and the public are demanding stronger assurances that AI behavior is trustworthy, governable, and accountable over time.

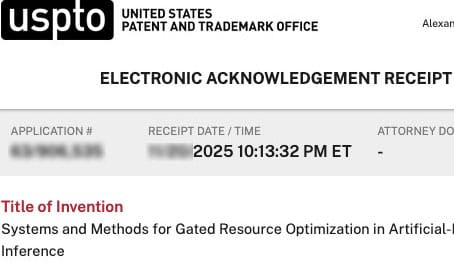

Systems and Methods for Gated Resource Optimization in Artificial-Intelligence Inference

Artificial intelligence systems, including large language models, recommendation engines, vision models, and hybrid multimodal architectures, increasingly operate as general-purpose platforms serving diverse workloads for many different users and organizations. These systems are typically deployed on shared compute infrastructure, such as cloud-based clusters of CPUs, GPUs, and specialized accelerators. As adoption has grown, so has the variety of use cases: low-risk experimentation, casual consumer queries, internal productivity tools, regulated workloads in healthcare and finance, safety-critical applications in transportation and infrastructure, and high-stakes decision support in law and public policy.

Systems and Methods for Automatic, Loss-Less Data Management of Context Windows and Persistent Memory in AI Systems

Modern artificial intelligence systems, particularly large language models (LLMs), remain fundamentally constrained by how they manage, retain, and retrieve information across time. Although these systems are capable of producing fluent, context-aware output within a single conversation, their ability to sustain continuity, accuracy, and coherence across extended interactions is limited by architectural factors inherited from earlier generations of machine learning systems.

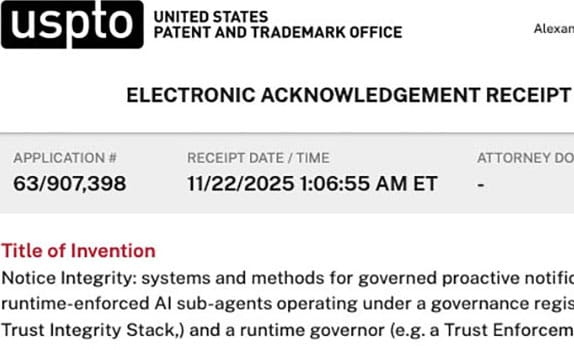

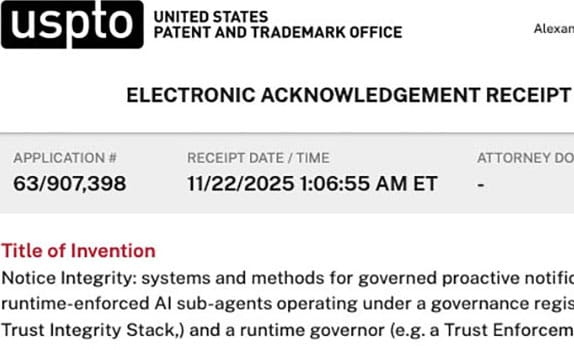

Notice Integrity: systems and methods for governed proactive notification by runtime‑enforced AI sub‑agents operating under a governance registry (e.g., a Trust Integrity Stack) and a runtime governor (e.g., Trust Enforcement)

Artificial intelligence systems increasingly engage users across health, finance, education, transportation, home automation, and personal productivity. As these systems shift from reactive tools to autonomous agents capable of anticipating needs, there is a growing requirement for proactive notifications that are not merely triggered but governed. No matter the domain, the fundamental principle remains: the user should be the administrator of their notifications, except in narrow categories where public‑safety policy or platform‑level safeguards supersede user control.
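
One way to read this principle is as a two-step delivery check: a small, fixed set of policy-defined categories bypasses user settings, and everything else defers to the user's own preferences. The Python sketch below assumes hypothetical category names (`public_safety_alert`, `platform_security`) and a hypothetical `NotificationPreferences` structure; it illustrates the precedence rule only, not a specific product's implementation.

```python
from dataclasses import dataclass, field

# Hypothetical names for the "narrow categories" in which public-safety
# policy or platform-level safeguards supersede user control.
OVERRIDE_CATEGORIES = frozenset({"public_safety_alert", "platform_security"})


@dataclass
class NotificationPreferences:
    """Per-user settings; the user administers these directly."""
    notifications_enabled: bool = True
    muted_categories: set[str] = field(default_factory=set)


def should_deliver(category: str, prefs: NotificationPreferences) -> bool:
    """Decide whether a proactive notification may be shown to the user."""
    # Policy-defined override categories bypass user settings entirely.
    if category in OVERRIDE_CATEGORIES:
        return True
    # Everywhere else, the user's own choices are authoritative.
    if not prefs.notifications_enabled:
        return False
    return category not in prefs.muted_categories


prefs = NotificationPreferences(muted_categories={"promotions"})
print(should_deliver("promotions", prefs))           # → False
print(should_deliver("medication_reminder", prefs))  # → True
print(should_deliver("public_safety_alert",
                     NotificationPreferences(notifications_enabled=False)))  # → True
```

Keeping the override set small and explicit mirrors the emphasis on narrow exceptions: any category not listed there falls through to user control.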

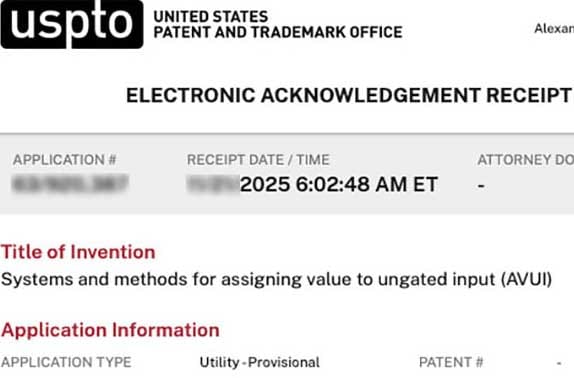

Modern AI systems increasingly rely on large volumes of mixed data: highly structured fields (such as form inputs and database columns) and loosely structured or unstructured “ungated” inputs (such as voice, free-text prompts, uploaded documents, URLs, behavioral signals and contextual logs). In many deployments, the majority of the useful signal about what a user actually needs, what was helpful, and what was harmful is carried in these ungated inputs and their surrounding context.

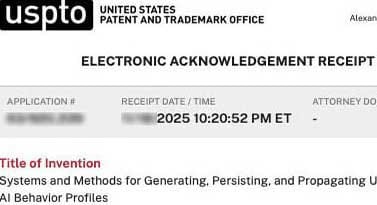

Systems and Methods for Generating, Persisting, and Propagating User-Specific AI Behavior Profiles

The present disclosure relates generally to digital assistants and human–computer interaction, and more specifically to systems and methods for capturing, persisting, and enforcing user preferences and policies governing assistant behavior, including in voice-driven interfaces. Over the past decade, digital assistants have become widely deployed across smartphones, smart speakers, personal computers, vehicles, and other connected devices. Commercial examples include voice-activated assistants integrated into mobile operating systems, cloud-backed smart speakers, in-car infotainment systems, and productivity software helpers.
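
As a minimal sketch of the capture, persist, and enforce cycle, the Python example below stores a user's preferences as JSON, reloads them, and applies them to a draft response before delivery. The `BehaviorProfile` fields (`max_response_words`, `blocked_topics`) and the rules in `enforce` are hypothetical illustrations; the disclosure summary does not define a profile schema.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class BehaviorProfile:
    # Hypothetical schema; the source does not specify profile fields.
    user_id: str
    max_response_words: int = 120
    blocked_topics: list[str] = field(default_factory=list)


def save_profile(profile: BehaviorProfile, path: Path) -> None:
    """Persist the profile so preferences survive across sessions."""
    path.write_text(json.dumps(asdict(profile)), encoding="utf-8")


def load_profile(path: Path) -> BehaviorProfile:
    """Rebuild the profile from its stored JSON form."""
    return BehaviorProfile(**json.loads(path.read_text(encoding="utf-8")))


def enforce(profile: BehaviorProfile, topic: str, draft: str) -> str:
    """Apply stored preferences to a draft response before delivery."""
    if topic in profile.blocked_topics:
        return "That topic is blocked by your saved preferences."
    words = draft.split()
    if len(words) > profile.max_response_words:
        # Trim to the user's preferred length and mark the cut.
        return " ".join(words[: profile.max_response_words]) + " ..."
    return draft


profile = BehaviorProfile("user-1", max_response_words=3, blocked_topics=["gambling"])
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "profile.json"
    save_profile(profile, path)
    restored = load_profile(path)

print(restored == profile)  # → True
print(enforce(restored, "weather", "It will be sunny all day"))  # → It will be ...
print(enforce(restored, "gambling", "Here are some odds"))
```

Because enforcement reads from the persisted profile rather than from the current conversation, the same preferences apply on any device or session that loads the file.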

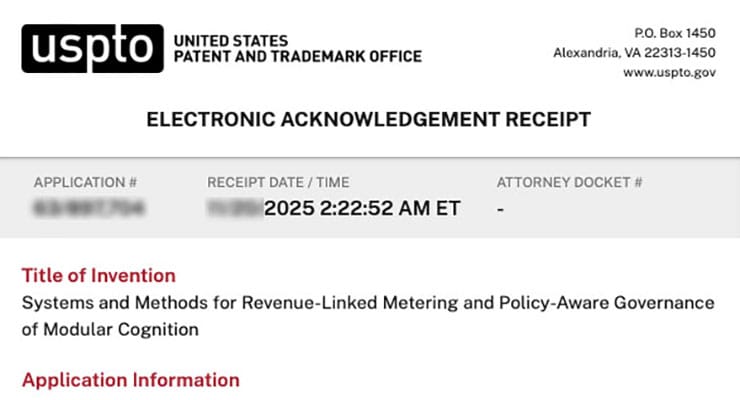

Systems and Methods for Revenue-Linked Metering and Policy-Aware Governance of Modular Cognition

Software and online services have historically relied on a small number of familiar revenue models. Early enterprise software was typically sold as perpetual licenses with maintenance contracts. As delivery moved to the web, subscription models became dominant: customers paid a fixed monthly or annual fee per seat or per account, regardless of exact usage. Consumer-facing platforms often adopted advertising-supported models, in which user attention and data are monetized indirectly through targeted ads. Marketplaces and app stores layered transaction fees, revenue share, and in-app purchases on top of these models.

PersistentMemory: Systems and Methods for Modular Cognition with Vertical Memory and Policy-Aware Context Assembly

Modern large language models (LLMs) and conversational AI systems remain fundamentally limited by the way they handle memory across time. Out of the box, most LLMs operate as stateless systems: they remember only the portion of an interaction that fits within the model’s context window, and all information outside that window is truncated, summarized, or forgotten unless explicitly re-provided by the user. This constraint is inherited from transformer architectures themselves, which require the full input sequence to be represented within a bounded token limit for attention to operate. As a result, the system’s “working memory” resets whenever the context window overflows.
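
The overflow behavior described above can be illustrated with a sliding-window buffer that drops the oldest turns once a fixed token budget is exceeded. This Python sketch assumes whitespace-separated words as a stand-in for tokens, and `SlidingWindowMemory` is a hypothetical name; real systems may summarize evicted turns rather than discard them outright.

```python
from collections import deque


class SlidingWindowMemory:
    """Keeps only the most recent turns that fit in a fixed token budget.

    Assumption: tokens are approximated by whitespace-separated words.
    """

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.turns: deque[str] = deque()
        self.used = 0

    def add(self, turn: str) -> list[str]:
        """Append a turn and return any older turns evicted to make room."""
        cost = len(turn.split())
        if cost > self.max_tokens:
            raise ValueError("a single turn exceeds the whole window")
        self.turns.append(turn)
        self.used += cost
        evicted = []
        # Drop from the oldest end until the window fits again.
        while self.used > self.max_tokens:
            old = self.turns.popleft()
            self.used -= len(old.split())
            evicted.append(old)
        return evicted

    def visible_context(self) -> str:
        """The only text a stateless model would receive on the next call."""
        return "\n".join(self.turns)


mem = SlidingWindowMemory(max_tokens=8)
mem.add("my name is Ada")             # 4 tokens, 4 used
mem.add("I like chess")               # 3 tokens, 7 used
evicted = mem.add("what is my name")  # would reach 11, so the oldest turn goes
print(evicted)  # → ['my name is Ada']
print(mem.visible_context())
```

In this run the turn stating the user's name is evicted, so a model given only `visible_context()` could not answer the final question: the loss of working memory the paragraph describes.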

Systems and Methods for Deploying an Attentive Modulator and Dynamic Oversight Layer

Artificial intelligence (AI) systems are increasingly relied upon for a wide range of tasks, from customer support and medical analysis to financial decision-making and software development. As these systems become more complex and the consequences of erroneous or hallucinated outputs grow more severe, the need for runtime governance mechanisms has become urgent.
