An open letter to Sundar Pichai: Gemini 3 hallucinates 88% – WTF?

Dear Sundar,

You should be winning this.

Gemini 3 is widely regarded as the strongest reasoning model in the field. On benchmarks, on logic, on structured problem-solving, Google has finally reclaimed technical superiority.

You also control the largest distribution engine in human history. Search. Android. Chrome. YouTube. Workspace. A global pipe you can open or close at will.

On paper, that combination should be unbeatable.

But it won’t deliver what you really need: adoption.

You can have all the reasoning in the world, but if you can’t remember reliably, share governance with users, or make money fairly and accurately, you’ve built a system that sounds impressive and fails where it matters.

That failure has already happened once.

Gemini’s first launch damaged trust. Users tried it, encountered confident errors and hallucinations, and walked away. That first rejection matters more than most product teams admit. It is extraordinarily difficult to persuade users to return after a failed start. You don’t get a second chance to make a first impression — let alone a third.

By embedding Gemini directly into Chrome, Google is betting that exposure will fix AI’s adoption problem. That if Gemini is everywhere, users will finally embrace it.

This misunderstands why adoption has stalled across all LLMs.

Users are not abandoning AI because they can’t find it. They’re abandoning it because their early experiences are bad. Confident answers that are wrong. Summaries that miss the point. Hallucinations delivered without hesitation or warning.

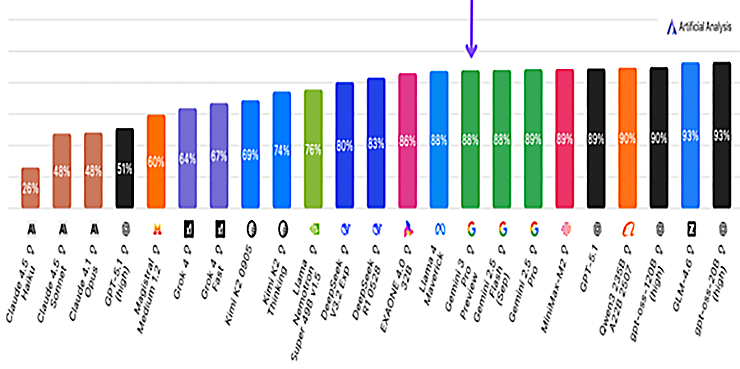

Despite major upgrades in Gemini 3, including better coding, long-context reasoning, and flashy multimodal output, the model still hallucinates at alarming rates. Independent benchmarking shows that when it cannot answer correctly, it fabricates an answer 88% of the time rather than saying "I don't know." The performance is impressive, but the ego is dangerous.

Artificial Analysis Omniscience Hallucination Rate

Omniscience Hallucination Rate (lower is better) measures how often the model answers incorrectly when it should have refused. It is defined as the proportion of wrong answers out of all non-correct attempts (wrong answers plus refusals). Gemini 3 scores 88%.
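To make the metric concrete, here is a minimal Python sketch of the rate as defined above: wrong answers divided by wrong answers plus refusals. The function names and the counts are hypothetical. They are invented for illustration and are not Artificial Analysis data.

```python
def hallucination_rate(incorrect: int, abstained: int) -> float:
    """Wrong answers as a share of all non-correct attempts (wrong + refused)."""
    non_correct = incorrect + abstained
    if non_correct == 0:
        return 0.0  # every attempt was correct, so there was nothing to refuse
    return incorrect / non_correct


def overall_error_rate(correct: int, incorrect: int, abstained: int) -> float:
    """Wrong answers as a share of all attempts, shown for contrast."""
    total = correct + incorrect + abstained
    return incorrect / total if total else 0.0


# Hypothetical counts, invented for illustration (not Artificial Analysis data).
correct, incorrect, abstained = 700, 264, 36
print(f"hallucination rate: {hallucination_rate(incorrect, abstained):.0%}")
print(f"overall error rate: {overall_error_rate(correct, incorrect, abstained):.1%}")
```

Correct answers sit outside the denominator, so the rate describes what the model does when it misses: whether it bluffs or abstains. It does not describe how often the model is wrong overall. In this made-up example, the same counts give an 88% hallucination rate and a 26.4% overall error rate.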

That’s not a distribution problem. It’s a credibility problem.

In fact, making AI more visible accelerates rejection. When a tool fails after you seek it out, you shrug. When it fails after being pushed into your primary workflow, you blame the system.

Distribution won’t repair trust. It will magnify its absence.

And now Google is conducting a global beta: a massive, public rollout of AI without users participating in governance, without an accurately metered revenue model and without 100 percent lossless memory.

It’s either a Hail Mary to save Gemini…

Or a reckless gamble from a company that should know better.

Which brings us to the three problems Gemini must solve before adoption is even possible:

1. Memory

LLMs survive their memory limits the same way JPEGs survived slow networks: through lossy compression.

JPEGs throw away pixels. LLMs throw away facts.

At first glance, the loss isn’t obvious. But look closely and the seams appear: blurred edges, missing detail, artifacts that weren’t visible at first. With LLMs, those artifacts are missing facts and broken continuity.

What JPEGs lose are pixels.

What LLMs lose is truth.
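One common, simple way a chat system copes with a fixed context budget is sliding-window truncation: once the budget is full, the oldest messages are dropped. The Python sketch below assumes that strategy and approximates tokens by whitespace-separated words. It is a hypothetical illustration of how facts can silently fall out of memory. It does not describe how Gemini manages context, and it does not fix the problem.

```python
from collections import deque


class SlidingWindowMemory:
    """Toy chat memory that keeps only the newest messages within a token budget.

    Hypothetical illustration: "tokens" are approximated by whitespace-separated
    words, and the oldest messages are evicted first when the budget is exceeded.
    """

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages = deque()  # oldest message on the left
        self.used = 0            # tokens currently held

    @staticmethod
    def count_tokens(text: str) -> int:
        # Crude stand-in for a real tokenizer.
        return len(text.split())

    def add(self, text: str) -> None:
        self.messages.append(text)
        self.used += self.count_tokens(text)
        # Evict oldest messages until the window fits the budget again.
        while self.used > self.max_tokens and self.messages:
            dropped = self.messages.popleft()
            self.used -= self.count_tokens(dropped)

    def recalls(self, fact: str) -> bool:
        # Naive substring check over whatever is still in the window.
        return any(fact in message for message in self.messages)


memory = SlidingWindowMemory(max_tokens=14)
memory.add("My account number is 4417.")                     # 5 tokens
memory.add("I prefer email over phone calls.")               # 6 tokens, 11 total
memory.add("Please remind me about the renewal next week.")  # 8 tokens, 19 > 14
print(memory.recalls("4417"))   # False: the oldest fact was evicted
print(memory.recalls("email"))  # True: newer messages survive
```

Nothing signals the eviction. The system keeps answering from the recent messages as if the earlier fact had never been stated, which is the kind of broken continuity described above.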

Without 100% lossless memory, AI cannot be trusted. Without trust, there is no adoption. Without adoption, there is no scale. And without scale, the market caps tied to AI infrastructure evaporate.

If you believe memory is a problem you can solve later, please know that a solution to this problem has been filed and is patent pending.

2. Governance

Enterprises will not adopt systems they cannot control. Users need agency as well: over how AI behaves, when it escalates, when it refuses and how it explains itself. They need visibility, constraint and the ability to govern outcomes rather than react to them after failure.

Right now, governance is implicit, opaque and centralized. That is tolerable for demos. It is unacceptable for real work.

Joni Mitchell never accepted an instrument as it was handed to her. She tuned it, again and again, until it matched the sound she heard in her heart. She custom-tuned her instruments for many of her songs, including "California."

Governance in AI should work the same way: not as control imposed from above, but as user-level tuning that lets people shape how the system behaves, remembers and responds.
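Here is a hypothetical sketch of what user-level tuning could look like. The Python below lets a user set when the system refuses, when it escalates to a human and whether it explains itself. The policy fields and the `decide` function are invented for illustration. The sketch also assumes the model exposes a calibrated confidence score, which is itself a strong assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernancePolicy:
    """Hypothetical user-set rules; field names are illustrative, not a real API."""
    refuse_below: float = 0.5     # say "I don't know" under this confidence
    escalate_below: float = 0.8   # hand off to a human under this confidence
    explain: bool = True          # attach the reason for the decision

    def __post_init__(self) -> None:
        if not 0.0 <= self.refuse_below <= self.escalate_below <= 1.0:
            raise ValueError("need 0 <= refuse_below <= escalate_below <= 1")


def decide(policy: GovernancePolicy, confidence: float) -> str:
    """Map a model's (assumed) confidence score to an action under the user's policy."""
    if confidence < policy.refuse_below:
        action, reason = "refuse", f"confidence {confidence:.2f} < {policy.refuse_below}"
    elif confidence < policy.escalate_below:
        action, reason = "escalate", f"confidence {confidence:.2f} < {policy.escalate_below}"
    else:
        action, reason = "answer", "confidence above both thresholds"
    return f"{action} ({reason})" if policy.explain else action


cautious = GovernancePolicy(refuse_below=0.7, escalate_below=0.9)
print(decide(cautious, 0.65))                         # refuse (confidence 0.65 < 0.7)
print(decide(GovernancePolicy(explain=False), 0.65))  # escalate
print(decide(cautious, 0.95))                         # answer (confidence above both thresholds)
```

The same model output leads to different actions depending on the thresholds the user chose. That is the kind of tuning the Joni Mitchell comparison points to.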

AI systems that do not give users control will be treated as toys, not tools.

If you believe governance is a problem you can solve later, please know that a solution to this problem has been filed and is patent pending.

3. Revenue

This is the problem the industry keeps avoiding.

Flat-rate pricing does not scale at the enterprise level. Token-based billing does not measure cost. Tokens measure words (more precisely, word fragments). Words are an inaccurate proxy for compute.

Consider these two scenarios:

A user talks to AI for thirty minutes about his girlfriend:

How she seems distant.

How she is slow to respond to texts.

How she is mysteriously unavailable.

The system dutifully transcribes every word, responds empathetically and consumes a massive number of tokens — all while avoiding the four words a human would scream immediately: SHE’S CHEATING ON YOU!

Now consider a three-word query:

“Is God real?”

Few questions demand more reasoning, context, philosophy and depth. Yet under token-based billing, that interaction may never recover the cost of compute.

That alone should end the debate over billing.

Tokens are not compute. They are a proxy, and an inaccurate one. If you want to bill for cost, you must meter compute.
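The two scenarios above can be run through a toy cost model. In this Python sketch, every price, token count and GPU-second figure is invented, and measured accelerator time (GPU-seconds) stands in for compute. It is generic usage-based metering, not the patent-pending method referred to here. It only shows how a token bill and the underlying compute cost can diverge.

```python
from dataclasses import dataclass

# Hypothetical prices, chosen only to make the arithmetic easy to follow.
PRICE_PER_1K_TOKENS = 0.01   # dollars charged per 1,000 tokens
COST_PER_GPU_SECOND = 0.002  # dollars, assumed provider cost of compute
MARKUP = 1.5                 # assumed margin over measured compute cost


@dataclass
class Request:
    label: str
    tokens: int         # input + output tokens (invented)
    gpu_seconds: float  # measured accelerator time (invented, assumed available)


def token_bill(r: Request) -> float:
    # What the customer pays under per-token pricing.
    return r.tokens / 1000 * PRICE_PER_1K_TOKENS


def compute_cost(r: Request) -> float:
    # What the request actually costs the provider to serve.
    return r.gpu_seconds * COST_PER_GPU_SECOND


def compute_bill(r: Request) -> float:
    # What the customer pays when billing is tied to metered compute.
    return compute_cost(r) * MARKUP


requests = [
    Request("30-minute relationship chat", tokens=12_000, gpu_seconds=20.0),
    Request('"Is God real?"', tokens=1_500, gpu_seconds=90.0),
]

for r in requests:
    margin = token_bill(r) - compute_cost(r)
    print(f"{r.label}: token bill ${token_bill(r):.3f}, "
          f"compute cost ${compute_cost(r):.3f}, margin ${margin:+.3f}, "
          f"compute-metered bill ${compute_bill(r):.3f}")
```

With these made-up figures, the long chat earns a margin under token billing while the three-word question loses money. Billing on metered compute tracks cost in both cases by construction.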

And if you believe compute-based metering is something you can defer, please know this:

It’s not impossible. It’s patent pending.

Sundar, you solved the hard part. You skipped the essential part.

Better reasoning and broader distribution will not overcome broken memory, absent governance and a revenue model untethered from reality. If Gemini fails again in the wild, there may not be another chance to reset user trust.

AI adoption will not be won by pushing harder.

It will be won by earning trust the hard way.

Right now, Google is running faster — with scissors.